When you begin an A/B test, you can't help but expect (and want) certain results. You want your beautiful new homepage to convert way better than your old one!

It's an age-old problem haunting high school science fairs around the world. If you really want something to be true, then it's tempting to see things how you want to see them.

But if you're too invested in the results you want, you might miss the results that could actually help your business. You might be inclined to throw up your hands and say, 'Well, that can't be. These tests are often wrong, anyway.'

We've fallen victim to it here at Grasshopper. A couple of years ago, we did extensive price testing because we wanted to maximize revenue, not just sign-ups. One of our lower price points had the highest conversion rate, but we had already picked a different price point as our favorite, and we rolled it out despite the test results.

What happened? Well, we saw a 10% decrease in conversion. This didn't affect our overall revenue because the new price point was a bit higher, but a 10% decrease is serious business! It shows how much you have to trust the test.
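Why can revenue hold steady while conversion drops? Revenue per visitor is roughly conversion rate times price, so a higher price can offset fewer sign-ups. Here is a small sketch of that arithmetic. The rates and prices are hypothetical, since the post doesn't share Grasshopper's real numbers, and the model ignores factors like customer lifetime value.

```python
def revenue_per_visitor(conversion_rate: float, price: float) -> float:
    """Expected revenue from one visitor: the chance they buy times the price."""
    return conversion_rate * price


def break_even_price_increase(conversion_drop: float) -> float:
    """Fractional price increase that keeps revenue per visitor flat when
    conversion falls by `conversion_drop` (0.10 means a 10% relative drop)."""
    return 1 / (1 - conversion_drop) - 1


# Hypothetical numbers for illustration; not Grasshopper's real prices or rates.
old_rate, old_price = 0.05, 20.00
new_rate, new_price = old_rate * 0.90, 22.50  # 10% fewer conversions, 12.5% higher price

print(f"Old price: ${revenue_per_visitor(old_rate, old_price):.2f} per visitor")
print(f"New price: ${revenue_per_visitor(new_rate, new_price):.2f} per visitor")
print(f"Break-even price increase for a 10% drop: {break_even_price_increase(0.10):.1%}")
```

The break-even point is about 11%, not 10%. A 10% price bump would not quite cover a 10% drop in conversion, because 0.9 × 1.1 = 0.99.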

Sometimes we're our own weakest link when it comes to testing. In this post, we'll show you how to make sure your results are accurate, so you can test with confidence before you screw up your revenue stream.

How to Get The Results You Want

Only Run A/B Tests

It’s tempting to test a bunch of different things at once, but an A/B test (testing just two versions against each other) is the simplest way to go. If you bite off more than you can handle, other factors can come into play, tests need to run longer, and results are less likely to be accurate.

Josh Thompson, who works at My Golf Tutor, an online golf instructional website, tested two images on his landing page to see which one would perform better.

Josh resisted the urge to test five different images, focusing on two.
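One reason two-version tests are easier to trust is that every extra variant gives you another chance at a false positive. Here is a rough sketch. It assumes each variant is compared with the control at a 5% significance level and treats the comparisons as independent, which is a simplification. The Bonferroni correction shown is one standard way to compensate. The post does not prescribe it.

```python
def chance_of_false_winner(num_comparisons: int, alpha: float = 0.05) -> float:
    """Probability that at least one comparison is a false positive, assuming
    no variant is truly better and the comparisons are independent."""
    return 1 - (1 - alpha) ** num_comparisons


def bonferroni_alpha(num_comparisons: int, alpha: float = 0.05) -> float:
    """Stricter per-comparison threshold that keeps the overall
    false-positive rate at or below `alpha`."""
    return alpha / num_comparisons


# Two images: one challenger compared with the control.
print(f"2 images: {chance_of_false_winner(1):.1%} chance of a false winner")
# Five images: four challengers, each compared with the control.
print(f"5 images: {chance_of_false_winner(4):.1%} chance of a false winner")
print(f"Per-test threshold needed with 5 images: {bonferroni_alpha(4):.4f}")
```

The stricter threshold has a cost: each comparison then needs more visitors to reach significance. That is part of why multi-variant tests take longer.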

Consider Natural Fluctuations

Almost all businesses have months that are super busy and months that are slow. For example, almost every year, we see sign-ups decrease in December and pick up again in January.

For this reason, it’s important to run both versions of an A/B test at the same time, rather than testing a new version against a previous period.
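Running both versions side by side means each visitor is randomly assigned to one of them during the same time window. Here is a minimal sketch of one common approach: hashing a visitor ID into a bucket. The visitor IDs and the `homepage-test` experiment name are hypothetical. Tools like Optimizely handle assignment for you in their own way.

```python
import hashlib
from collections import Counter


def assign_variant(visitor_id: str, experiment: str = "homepage-test") -> str:
    """Deterministically put a visitor in bucket 'A' or 'B'.

    Hashing the visitor ID (salted with the experiment name) gives a stable,
    roughly 50/50 split: the same visitor always sees the same version, and
    both versions collect traffic on the same days, so seasonal swings
    affect them equally.
    """
    digest = hashlib.sha256(f"{experiment}:{visitor_id}".encode()).hexdigest()
    return "A" if int(digest, 16) % 2 == 0 else "B"


# Simulate 10,000 visitors arriving during the test period.
counts = Counter(assign_variant(f"visitor-{i}") for i in range(10_000))
print(counts)  # expect a split close to 50/50
```

Because the split is deterministic, a returning visitor never flips between versions. Flipping would blur the results.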

Account for Possible Type I and Type II Errors

You may not be a seasoned statistician, but you’ve still got to know about potential errors before you start testing. The most common errors you’ll see are known as Type I and Type II errors.

A Type I error is a false positive: there's no real difference between the two things you're testing, but the test shows one anyway. In A/B terms, your new homepage looks like a winner when it actually performs no better than the old one.

A Type II error is the opposite: a false negative, where the test misses a difference that really exists. For example, a patient has strep throat, but the strep test says he's strep-free. In an A/B test, your new page really does convert better, but the test shows no difference.
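To see both errors in numbers, the sketch below simulates many tests with known, made-up conversion rates and checks each one with a two-proportion z-test. The Type I case compares 10% with 10%. The Type II case compares 10% with 12%. Each version gets 500 visitors. The rates, sample size, and choice of test are assumptions for illustration, not what any particular tool uses.

```python
import math
import random


def two_proportion_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value from a pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:  # nobody (or everybody) converted: no evidence either way
        return 1.0
    z = (conv_b / n_b - conv_a / n_a) / se
    return math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))


def rejection_rate(rate_a: float, rate_b: float, visitors_per_arm: int = 500,
                   experiments: int = 1_000, alpha: float = 0.05, seed: int = 7) -> float:
    """Simulate many A/B tests with known true conversion rates and return
    the fraction in which the z-test declares a significant difference."""
    rng = random.Random(seed)
    significant = 0
    for _ in range(experiments):
        conv_a = sum(rng.random() < rate_a for _ in range(visitors_per_arm))
        conv_b = sum(rng.random() < rate_b for _ in range(visitors_per_arm))
        if two_proportion_p_value(conv_a, visitors_per_arm, conv_b, visitors_per_arm) < alpha:
            significant += 1
    return significant / experiments


# Identical pages (10% vs 10%): every "significant" result is a Type I error.
type_1 = rejection_rate(0.10, 0.10)
# Page B truly converts better (10% vs 12%): every miss is a Type II error.
type_2 = 1 - rejection_rate(0.10, 0.12)

print(f"Type I (false positive) rate:  {type_1:.1%}")
print(f"Type II (false negative) rate: {type_2:.1%}")
```

The Type I rate lands near the 5% significance level you chose. With only 500 visitors per version, though, the test misses the real 10%-to-12% improvement most of the time. The usual fix for Type II errors is a bigger sample.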

Make Your Own Calculations

Tools like Optimizely and Unbounce are helpful, but to make sure your results are what they seem, it’s best to run your own calculations based on your traffic, your current conversion rate, and natural fluctuations.

Some A/B testing tools don’t disclose the sample size they require before reporting a result, which can lead to results that aren’t statistically significant. We came across one tool that needed only 25 visitors to produce test results. That's not nearly enough visitors to get a real answer about whether or not a test is successful!
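Here's a rough sketch of the kind of check you can run yourself. It assumes a standard two-proportion z-test, which is one common choice; your tool may use a different method. The visitor and conversion counts are made up. The same conversion rates (about 8% vs. 23%) are compared at 25 visitors and at 2,500 visitors.

```python
import math


def two_proportion_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value from a pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0
    z = (conv_b / n_b - conv_a / n_a) / se
    return math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))


# Hypothetical results: identical conversion rates, very different sample sizes.
p_small = two_proportion_p_value(1, 12, 3, 13)          # 25 visitors total
p_large = two_proportion_p_value(100, 1200, 300, 1300)  # 2,500 visitors total

for label, p in (("25 visitors", p_small), ("2,500 visitors", p_large)):
    verdict = "significant" if p < 0.05 else "not significant"
    print(f"{label}: p = {p:.3g} ({verdict} at the 5% level)")
```

With 25 visitors, version B looks almost three times better, yet the difference is not statistically significant. The z-test's normal approximation is also shaky at counts this small, which is another reason not to trust such a tiny sample.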

Run Tests Long Enough and Calculate Sample Size

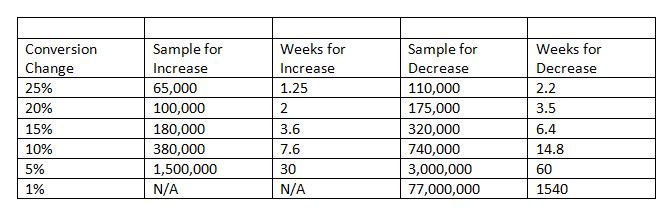

If you don’t calculate how long you’ll need to run your test, you won’t get accurate results. Here's a super handy chart showing how long a test needs to run based on how many visitors come to your site.

For example, according to the chart, detecting a 25% increase in conversion takes 65,000 visitors over a span of 1.25 weeks. To make sure you're running your own test long enough, you'll have to do some calculations based on how many visitors come to your site and how well it converts today. Signal vs. Noise has a great post about how to determine sample size that might help you out.
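Here is a sketch of one widely used normal-approximation formula for the number of visitors each version needs, followed by how many days that takes. It assumes a two-sided test at 5% significance and 80% power, which are conventional defaults but not figures from the chart. The example site is hypothetical, with a 3% baseline conversion rate and 5,000 daily visitors. The chart's own assumptions aren't stated, so don't expect these numbers to match it.

```python
import math
from statistics import NormalDist


def visitors_per_variant(baseline_rate: float, relative_lift: float,
                         alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate visitors needed in EACH version to detect `relative_lift`
    (0.25 means 25% better) with a two-sided two-proportion z-test.

    n = (z_{1-alpha/2} * sqrt(2 * p_bar * (1 - p_bar))
         + z_{power} * sqrt(p1 * (1 - p1) + p2 * (1 - p2)))^2 / (p2 - p1)^2
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_power * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


def days_to_run(baseline_rate: float, relative_lift: float,
                daily_visitors: int, variants: int = 2) -> int:
    """Days needed when traffic is split evenly across `variants` versions."""
    total = visitors_per_variant(baseline_rate, relative_lift) * variants
    return math.ceil(total / daily_visitors)


# Hypothetical site: 3% baseline conversion, 5,000 visitors a day.
for lift in (0.10, 0.25, 0.50):
    n = visitors_per_variant(0.03, lift)
    print(f"{lift:.0%} lift: {n:,} visitors per version, "
          f"about {days_to_run(0.03, lift, 5_000)} days")
```

Smaller improvements need far more traffic. Halving the lift you want to detect roughly quadruples the sample, because the sample grows with 1/(p2 - p1)^2. Many teams also round the duration up to whole weeks so that both weekday and weekend traffic are included.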

Trust Your Tests

Yeah, yeah, you really wanted that color change to make a difference in conversion, but if it didn't, it didn't. Your intuition might be good, but it's backed by heart, not data. If you're going to run a test, trust the results, even if you don't like them.

Closely Monitor After Deployment

Once you’ve decided to make a change, get ready to closely monitor what happens. To be successful, you have to confirm that the change meets your expectations. If it doesn’t, go back and analyze what happened, what went wrong, and how you can improve future tests.

Consider Worst Case Scenarios

Before you get super happy about your results and start clinking glasses of champagne with your co-workers, consider the worst case scenario. What if you miscalculated and your deployment results in a 15% decrease in conversion? Will your business be ok if that happens? Will it be easy to change things back?
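Putting a number on the worst case makes "Will your business be ok?" easier to answer. This is a minimal sketch, and every input (traffic, conversion rate, revenue per sign-up) is a hypothetical placeholder for your own figures.

```python
def worst_case_monthly_impact(monthly_visitors: int, conversion_rate: float,
                              revenue_per_signup: float, conversion_drop: float):
    """Sign-ups and revenue lost per month if conversion falls by
    `conversion_drop` (0.15 means a 15% relative decrease)."""
    baseline_signups = monthly_visitors * conversion_rate
    lost_signups = baseline_signups * conversion_drop
    return lost_signups, lost_signups * revenue_per_signup


# Hypothetical business: 40,000 visitors/month, 3% conversion, $30 per sign-up.
lost_signups, lost_revenue = worst_case_monthly_impact(40_000, 0.03, 30.0, 0.15)
print(f"Worst case: {lost_signups:.0f} fewer sign-ups and "
      f"${lost_revenue:,.0f} less revenue per month")
```

If that monthly loss would really hurt, make sure you can roll the change back quickly.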

Test EVERYTHING

When you make a change on your site, you might not consider conversion at all, but you have to. At Grasshopper, we test almost everything before we deploy it, just to make sure that what we're putting out there doesn't make things worse! If you test everything, you're far less likely to be surprised by what happens, as long as you believe the test.

Testing Beats Intuition

Saul Torres, the Marketing Analyst for Global Healing Center, had heard free shipping might result in higher conversion rates, but he was apprehensive. His intuition told him it was a bad idea: What if it made no difference and the company was just giving away free shipping?

As Saul put it: "We kept hearing that free shipping was a great way to increase the conversion rate on our site, but we were hesitant to put it on our site since we did not know what kind of return we would get if we offered it. Would it be worth it?"

But A/B testing settled Saul's stomach. He sent 50% of the traffic to a page with free shipping and 50% to a page with the standard $2.99 shipping rate. He found a 20-25% increase in conversion rate on the company's products with free shipping, which justified adding free shipping site-wide. Saul trusted the test.

The A/B Test Did Its Job and So Can You!

When you're running an A/B test, it's extremely important to be informed. If you want your results to improve your business, you have to be careful about how you design your tests, even if you're not a statistician.

So get going and get testing. We're waiting to see what you can do.

Your Turn: Have you done A/B testing for your small business website? What's worked? What hasn't? How do you ensure you get usable results?